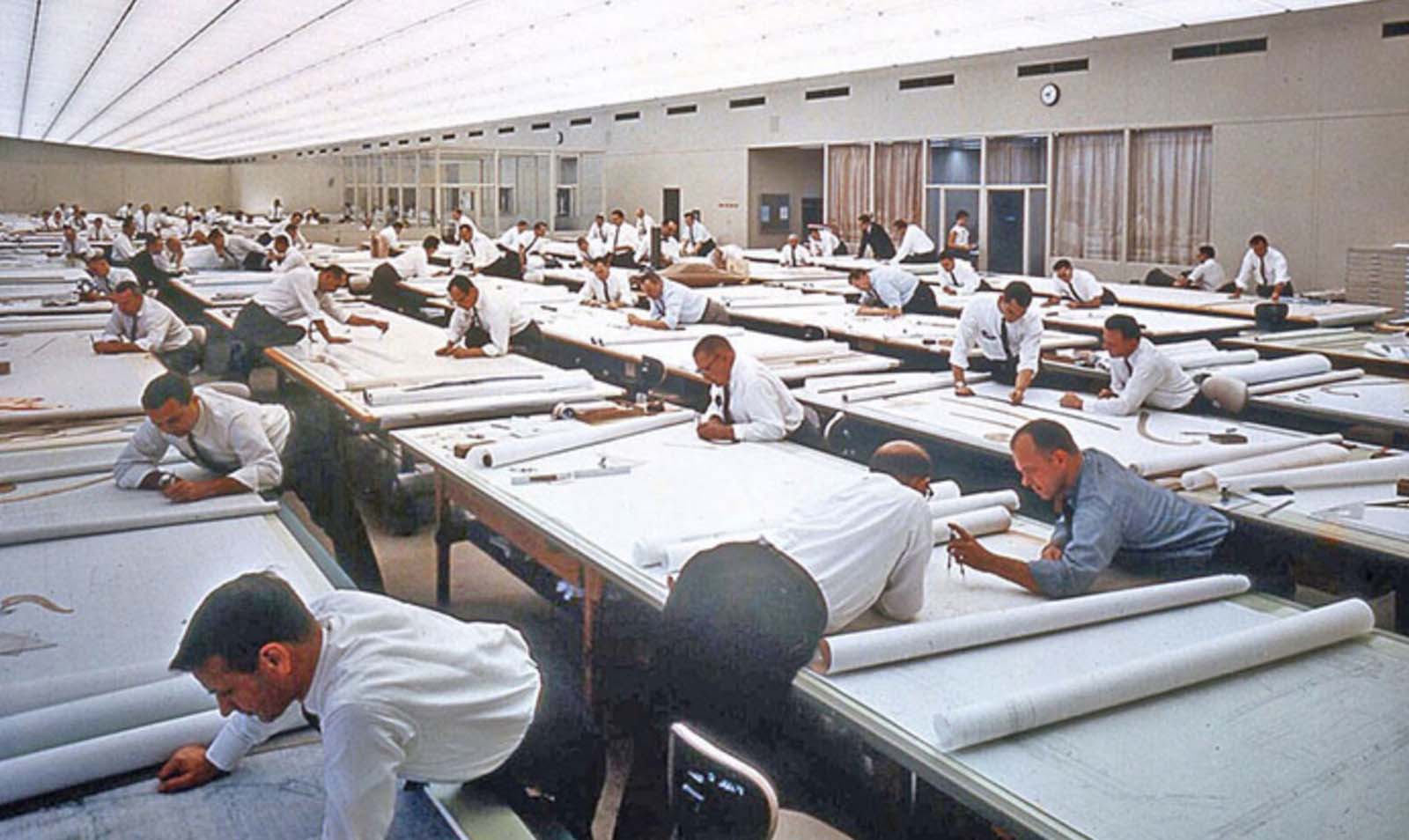

For decades, the engineering design landscape has been quietly undergoing a transformation, largely driven by the relentless advancement of digital technology. 3D CAD modelling has replaced traditional pen-and-paper drawings, and sophisticated simulation software has allowed engineers to replace many physical tests with faster, more cost-effective physics-based simulations.

Simulating large-scale, high-fidelity nonlinear models with a physics-based approach can be time-consuming, often taking several hours or even days per run. System analysis and design can require thousands or even hundreds of thousands of such simulations, which poses a substantial computational challenge.

These challenges have prompted engineering firms to invest substantially in making such data-intensive workflows more efficient.

The Rise of Digital Twins: Transforming Design Across Industries

A Digital Twin is a virtual representation of a physical object or system that mirrors its real-world counterpart in a digital environment. This digital replica is built from data supplied by sensors, IoT devices, and other sources, allowing it to simulate the behaviour, characteristics, and performance of the physical entity in real time or over time. Digital Twins serve as powerful tools for understanding, analysing, and optimising complex systems.

As computing power has surged, engineering teams have used it to craft highly detailed digital models that replicate a product's many characteristics and expected behaviours. These digital twins are changing the way products are designed, operated, and maintained across a multitude of industries, from industrial machinery to medical devices.

Empowering Computers in the Engineering Process

The digitisation of engineering has allowed digital twins to play a more proactive role in the design process. By coupling simulation techniques such as Finite Element Analysis and Computational Fluid Dynamics with generative design and optimisation, engineers can instruct computers to run hundreds or thousands of simulations, iteratively refining the design parameters until an optimal or near-optimal solution is found. The outcomes can surpass what even the most seasoned human designers achieve.

Limitations in Digital Design Optimisation

Despite its immense potential, digital design optimisation based on physics-based simulation faces significant limitations. Simulating complex structures or fluid dynamics relies on computationally intensive differential-equation solvers, which constrains design optimisation to a few parameters at a time. As a result, only a small portion of the design space is explored, yielding incremental improvements at best.
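A rough count shows why the number of parameters matters so much. If each of $d$ design parameters is tested at $k$ levels in a full-factorial study, the number of runs $N$ grows exponentially with $d$. The figure of one hour per simulation below is an illustrative assumption, not a measured value:

```latex
\begin{aligned}
N &= k^{d} \\
k = 5,\; d = 3 &:\quad N = 5^{3} = 125 \ \text{runs} \\
k = 5,\; d = 10 &:\quad N = 5^{10} = 9\,765\,625 \ \text{runs} \approx 9.8 \times 10^{6}\ \text{h} \approx 1\,100\ \text{years at 1 h per run}
\end{aligned}
```

Smarter sampling and optimisation algorithms need far fewer runs than a full grid, but the exponential trend is why the cost of each individual simulation dominates.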

In today's fast-moving landscape, where competition dictates the pace at which enterprises must operate and innovate, the conventional approach to simulation finds itself at a crossroads. On its own, it is no longer fully equipped to meet the demands of a rapidly changing world.

Engineering firms, in their quest for excellence, are confronted with the need to reassess their methodologies and technologies. The conventional paradigm of design optimisation must change to remain relevant in this era of heightened competition and continual innovation.

Engineering companies are therefore exploring novel approaches, driven by new technologies and methodologies that promise to push the boundaries of what is possible in product development.

A New Frontier: Deep Learning Surrogates (DLS)

A surrogate model is a computationally cheap approximation designed to replicate the behaviour of a costly simulation.

To express this concept more formally, suppose you want to optimise a function f(x), but every evaluation of f is computationally expensive.

Evaluating f might involve solving a partial differential equation (PDE) for each data point or running intricate numerical linear algebra routines, both of which demand substantial computational resources. The idea behind a surrogate model is to build an alternative model, denoted s, that emulates the behaviour of f.

This emulation is achieved by training s using historical data gathered from previous evaluations of f.
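Written out, one common formulation looks like this. It is a sketch under stated assumptions: a squared-error fitting criterion (other losses are also used), a chosen model family $\mathcal{S}$ (polynomials, Gaussian processes, neural networks, ...), and minimisation as the optimisation goal (for maximisation, flip the sign):

```latex
\begin{aligned}
\mathcal{D} &= \{(x_i,\ f(x_i))\}_{i=1}^{N} && \text{historical evaluations of } f \\
s &= \arg\min_{\tilde{s} \in \mathcal{S}} \ \frac{1}{N}\sum_{i=1}^{N} \big(\tilde{s}(x_i) - f(x_i)\big)^2 && \text{fit the surrogate} \\
\hat{x} &= \arg\min_{x} \ s(x) && \text{optimise the cheap } s \text{ instead of } f
\end{aligned}
```

The candidate $\hat{x}$ is usually confirmed with one evaluation of the expensive $f$, since the surrogate is only an approximation.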

The construction of a surrogate model unfolds through a three-step process:

- Sample Selection: Initially, a representative set of data points is selected to capture the variability and characteristics of the function f.

- Construction of the Surrogate Model: With the selected data points, the surrogate model s is constructed. This model aims to mimic the behaviour of the costly function f while offering computational efficiency.

- Surrogate Optimisation: Once the surrogate model s is in place, it can be utilised to optimise or approximate the function f with significantly reduced computational cost.
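The three steps can be sketched in a few lines of Python. This is a minimal illustration, not a production workflow: `expensive_f` is a hypothetical cheap stand-in for a costly simulation, and the evenly spaced samples and degree-6 least-squares polynomial are assumptions chosen for brevity. The final check against the expensive model is common practice rather than part of the steps listed above.

```python
import numpy as np


def expensive_f(x: np.ndarray) -> np.ndarray:
    """Hypothetical stand-in for a costly simulation (e.g. one PDE solve per point).
    It is a cheap analytic function here so the example runs instantly."""
    return (x - 0.3) ** 2 + 0.1 * np.sin(8 * x)


# Step 1 - sample selection: a small set of points spread over the design range [0, 1].
x_train = np.linspace(0.0, 1.0, 12)
y_train = expensive_f(x_train)  # the only "expensive" calls made for training

# Step 2 - surrogate construction: least-squares polynomial fit s(x) ~ f(x).
s = np.polynomial.Polynomial.fit(x_train, y_train, deg=6)

# Step 3 - surrogate optimisation: search the cheap surrogate densely ...
x_dense = np.linspace(0.0, 1.0, 10_001)
x_best = x_dense[np.argmin(s(x_dense))]

# ... then confirm the candidate with a single call to the expensive model.
y_check = expensive_f(x_best)
print(f"surrogate optimum x = {x_best:.3f}, f(x) = {y_check:.4f}")
```

The dense search costs 10,001 surrogate evaluations but only 13 calls to `expensive_f` in total, which is the whole point of the approach.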

Deep Learning Surrogates (DLS) are a specific category of surrogate models that use deep neural networks to approximate the behaviour of computationally expensive simulations. Like traditional surrogate models, they are employed when optimising functions such as f(x) whose evaluation is costly, for example because it requires solving complex partial differential equations or performing intricate numerical linear algebra operations.

The key innovation with deep learning surrogates lies in their use of deep neural networks, often convolutional or recurrent networks, to create a highly flexible and adaptable surrogate model. These networks are trained using historical data collected from previous evaluations of the expensive function f, enabling them to capture intricate patterns and relationships in the data.
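To make the idea concrete, here is a minimal neural-network surrogate written in plain NumPy: a small fully connected network (not the convolutional or recurrent architectures mentioned above) trained by full-batch gradient descent on input-output pairs. `expensive_simulation`, the network size, the learning rate and the number of steps are all illustrative assumptions; in practice a framework such as PyTorch or JAX would compute the gradients.

```python
import numpy as np

rng = np.random.default_rng(42)


def expensive_simulation(X: np.ndarray) -> np.ndarray:
    """Hypothetical stand-in for a costly solver: 2 design parameters -> 1 output."""
    return np.sin(3 * X[:, :1]) * np.cos(2 * X[:, 1:2]) + 0.5 * X[:, :1] * X[:, 1:2]


# "Historical" evaluations of f, used as training data (256 input-output pairs).
X = rng.uniform(-1.0, 1.0, size=(256, 2))
y = expensive_simulation(X)  # shape (256, 1)

# Fully connected network: 2 inputs -> 32 tanh units -> 1 output.
W1 = rng.normal(0.0, 0.5, size=(2, 32))
b1 = np.zeros(32)
W2 = rng.normal(0.0, 0.5, size=(32, 1))
b2 = np.zeros(1)


def forward(X):
    h = np.tanh(X @ W1 + b1)
    return h, h @ W2 + b2


def mse(pred, target):
    return float(np.mean((pred - target) ** 2))


loss_before = mse(forward(X)[1], y)
learning_rate = 0.05
for step in range(3000):  # full-batch gradient descent
    h, pred = forward(X)
    d_pred = 2.0 * (pred - y) / len(X)  # dLoss/dpred for the mean squared error
    dW2, db2 = h.T @ d_pred, d_pred.sum(axis=0)
    d_pre = (d_pred @ W2.T) * (1.0 - h ** 2)  # back-propagate through tanh
    dW1, db1 = X.T @ d_pre, d_pre.sum(axis=0)
    # In-place updates, so forward() sees the new weights.
    W1 -= learning_rate * dW1
    b1 -= learning_rate * db1
    W2 -= learning_rate * dW2
    b2 -= learning_rate * db2

loss_after = mse(forward(X)[1], y)
print(f"training MSE: {loss_before:.4f} -> {loss_after:.4f}")

# Once trained, evaluating a new design is just a couple of matrix products.
x_new = np.array([[0.2, -0.4]])
print(forward(x_new)[1].item(), expensive_simulation(x_new).item())
```

All the cost sits in training; each later prediction is a handful of matrix products, which is where the speed-up over a solver comes from.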

Engineering companies are exploring this approach because it promises to accelerate and enhance automated design optimisation, even for larger and more complex engineering challenges.

The Power of DLS: Reducing Complexity and Boosting Speed

DLS technology can significantly reduce computational complexity and dramatically increase speed. Once trained, deep learning models typically run orders of magnitude faster than traditional physics simulations (let's ignore the training time of the neural network for a second...😉). This speed-up translates into several competitive advantages, including reduced time to market, lower engineering costs, and the ability to explore a broader range of parameters for product design.

Demystifying Deep Learning Surrogates (DLS)

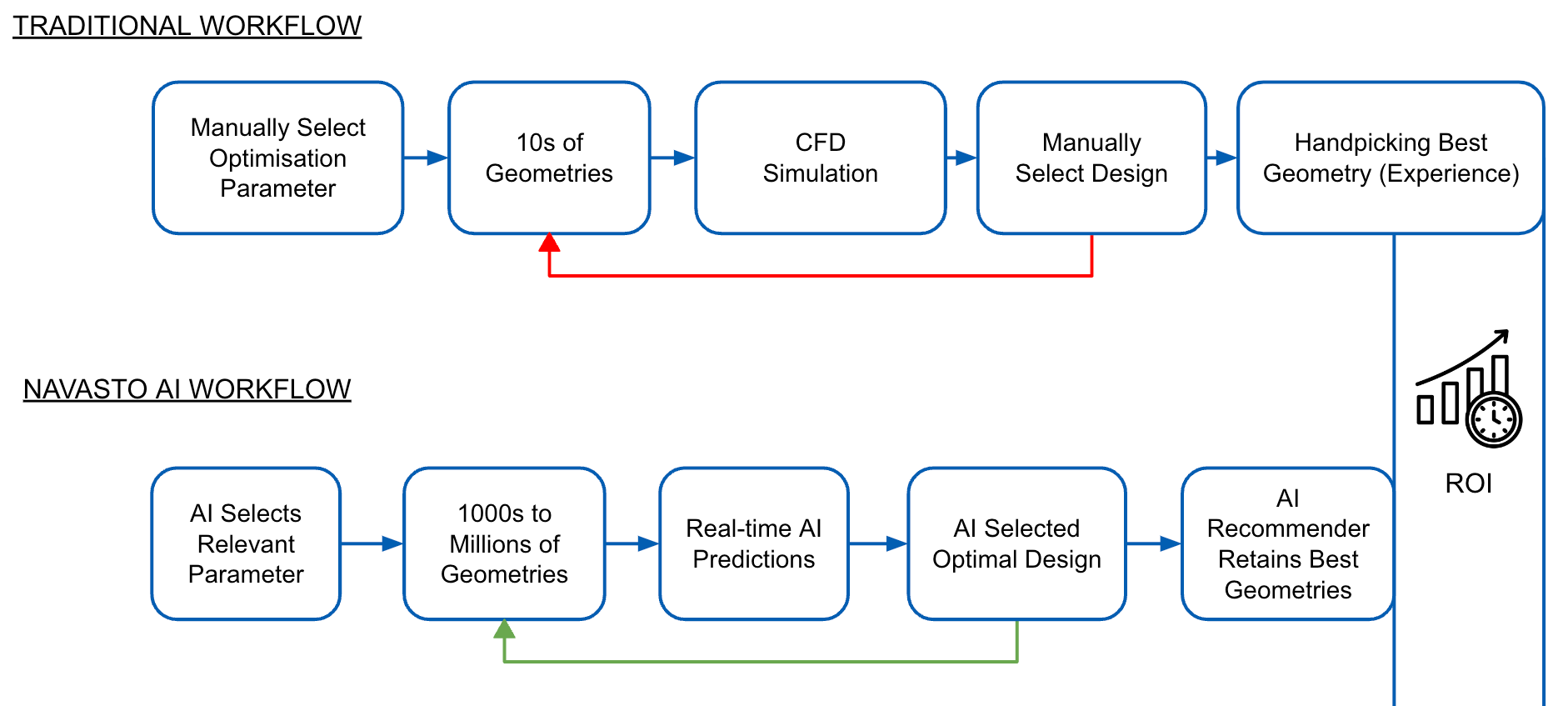

At its core, the DLS process bears resemblance to other digital design optimisation methods. Engineering teams define product constraints and desired performance criteria, while computers execute multiple conventional simulations for different design options. However, the key differentiator lies in what happens next.

In parallel with these initial simulations, a neural network is trained on their results. It is designed to receive the same inputs as the conventional simulation and to reproduce its outputs.

Once training is complete, the deep learning model approximates the conventional simulation, within the accuracy reached during training, at a significantly accelerated pace: in practical applications, often orders of magnitude faster than its traditional counterpart.
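One way to picture "training while simulating" is a loop that runs conventional simulations in batches, scores the current surrogate on each new batch before retraining, and stops calling the solver once the error is small enough. In this sketch a least-squares polynomial stands in for the neural network to keep it short; `run_simulation`, the batch size of 8, the cap of 20 batches and the RMS tolerance of 1e-3 are hypothetical choices.

```python
import numpy as np


def run_simulation(x: np.ndarray) -> np.ndarray:
    """Hypothetical stand-in for the conventional solver (cheap analytic function here)."""
    return np.exp(-x) * np.cos(4.0 * x)


rng = np.random.default_rng(1)
tolerance = 1e-3  # assumed acceptable RMS error before switching to the surrogate
X_seen, y_seen = np.empty(0), np.empty(0)
surrogate = None
converged = False

for batch in range(1, 21):  # at most 20 batches of conventional simulations
    x_batch = rng.uniform(0.0, 2.0, size=8)  # next design options to simulate
    y_batch = run_simulation(x_batch)
    if surrogate is not None:
        # Score the current surrogate on fresh simulations it was not trained on.
        rms = np.sqrt(np.mean((surrogate(x_batch) - y_batch) ** 2))
        if rms < tolerance:
            converged = True
            break  # accurate enough: use the surrogate from here on
    # Otherwise add the new results to the training set and retrain.
    X_seen = np.concatenate([X_seen, x_batch])
    y_seen = np.concatenate([y_seen, y_batch])
    surrogate = np.polynomial.Polynomial.fit(X_seen, y_seen, deg=min(12, len(X_seen) - 1))

print(f"converged={converged} after {batch} batches; surrogate trained on {len(X_seen)} runs")
```

Validating on unseen runs before adding them to the training set gives an honest error estimate at no extra simulation cost.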

Embracing deep learning in product development necessitates more than just technological adoption. It entails restructuring engineering departments and reallocating resources in innovative ways. This may involve recruiting new talent, particularly data scientists, data engineers, and machine learning specialists, and securing access to new computing resources, either in-house or through cloud services.

Reduced Order Models vs. Deep Learning Surrogates - What's the Difference?

Reduced-Order Models (ROMs) and Deep Learning Surrogates (DLS) are both powerful tools in the realm of approximating complex and computationally expensive simulations. However, they differ significantly in their fundamental approaches and methodologies.

DLS rely on neural networks and deep learning techniques. These models are data-driven and excel at capturing intricate relationships without prior knowledge of the underlying physics. DLS demand substantial volumes of training data, usually consisting of input-output pairs from high-fidelity simulations. They are often considered "black-box" models, lacking the interpretability of traditional mathematical models, and understanding why a DLS makes a specific prediction can be challenging. However, their versatility is a notable strength, as they can tackle a wide range of problems, especially those characterised by complex, nonlinear relationships. DLS thrive in data-rich environments where traditional physics-based models might struggle to capture the intricacies of the system.

Conversely, ROMs lean on mathematical and analytical techniques. Their primary objective is to reduce the dimensionality of intricate simulations while retaining their core characteristics. Methods such as Proper Orthogonal Decomposition (POD), Proper Generalised Decomposition (PGD), and Reduced Basis Methods are commonly employed to construct ROMs. These approaches typically need comparatively little data from high-fidelity simulations, usually snapshots of the simulation at various parameter settings. ROMs are typically more interpretable, as they are grounded in mathematical principles, allowing engineers and scientists to gain valuable insights into the underlying physics or behaviour of the system. ROMs shine in scenarios where the physical principles are well understood and dimensionality reduction can be applied effectively.
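The core of Proper Orthogonal Decomposition can be shown with a singular value decomposition (SVD) of a snapshot matrix. The sketch below uses a made-up analytic field in place of real simulation snapshots and an assumed 99.99 % energy threshold. It only covers the dimensionality-reduction step; a complete ROM additionally needs a way to obtain the reduced coefficients for new parameter values, for example Galerkin projection of the governing equations or interpolation between snapshots.

```python
import numpy as np

# Hypothetical snapshot data: a 1-D field u(x; p) sampled at 200 grid points
# for 30 parameter values p (each column is one high-fidelity "snapshot").
x = np.linspace(0.0, 1.0, 200)
params = np.linspace(0.5, 2.0, 30)
snapshots = np.column_stack([np.exp(-p * x) * np.sin(np.pi * x) for p in params])

# POD: the left singular vectors of the snapshot matrix are the POD modes.
U, S, Vt = np.linalg.svd(snapshots, full_matrices=False)

# Keep the smallest number of modes that captures 99.99 % of the snapshot "energy".
energy = np.cumsum(S**2) / np.sum(S**2)
r = int(np.searchsorted(energy, 0.9999) + 1)
basis = U[:, :r]  # 200 x r reduced basis

# Each snapshot is now described by r coefficients instead of 200 values.
coeffs = basis.T @ snapshots  # r x 30
reconstruction = basis @ coeffs
rel_error = np.linalg.norm(snapshots - reconstruction) / np.linalg.norm(snapshots)
print(f"{r} modes, relative reconstruction error {rel_error:.2e}")
```

Because the field varies smoothly with p, a handful of modes suffices, which is the situation in which ROMs work best.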

DLS in Action: The Navasto Case Study

Deep learning surrogates are already making waves (no pun intended) in challenging engineering environments.

The International Maritime Organization (IMO) has set ambitious efficiency targets for new ships, demanding a 30% increase in efficiency by 2025. Achieving this necessitates a deep understanding of how changes in hull design impact overall performance and operational costs, requiring a delicate balance between competing objectives.

The integration of surrogates into design synthesis processes offers a way to handle complex design considerations in maritime engineering. By training a Reduced-Order Model (ROM) on simulation data from a Design of Experiments (DoE), real-time predictions of new geometries, flow fields, and scalar performance values become attainable. This approach not only accelerates design processes but also fosters interactive design collaboration between disciplines.
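Which DoE scheme was used is not specified here. As an illustrative assumption, a Latin hypercube is one common space-filling design for generating such training data: each parameter range is split into n equal strata and every stratum is sampled exactly once. The hull parameters and bounds below are hypothetical.

```python
import numpy as np


def latin_hypercube(n: int, bounds: list[tuple[float, float]], rng: np.random.Generator) -> np.ndarray:
    """Return n samples in len(bounds) dimensions; each dimension's range is split
    into n equal strata and every stratum is hit exactly once."""
    d = len(bounds)
    # Independent random permutation of the stratum indices for each dimension.
    strata = np.column_stack([rng.permutation(n) for _ in range(d)])
    # Random position inside each stratum, mapped to [0, 1).
    unit = (strata + rng.random((n, d))) / n
    lo, hi = np.array(bounds, dtype=float).T
    return lo + unit * (hi - lo)


# Hypothetical hull parameters and ranges, for illustration only:
# length-to-beam ratio, block coefficient, bow flare angle [deg].
bounds = [(5.5, 7.5), (0.55, 0.75), (10.0, 30.0)]
rng = np.random.default_rng(2024)
doe = latin_hypercube(20, bounds, rng)
print(doe.shape)  # one row per design variant to simulate
```

Each row would then be turned into a geometry and simulated; the resulting snapshots are the training data for the ROM.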

In the world of ship design, engineers are confronted with multifaceted challenges, including stringent emissions regulations, the pursuit of efficiency optimisation, and the need for future-proof propulsion systems. Recent advancements in Artificial Intelligence (AI) have opened up new horizons for ship design by enabling real-time predictions of flow fields and scalar quantities. This breakthrough expedites product development processes, ultimately driving innovation and efficiency in the maritime industry.

This case study illustrates the use of parametric models built in CAESES and simulations run in Simcenter STAR-CCM+. Machine learning models based on the geometric parameters significantly expedited the design process.

Machine learning models have the potential to help optimise product designs, enhancing the performance, safety, and sustainability of maritime assets. The ROM, generated from simulation data, enables rapid decision-making, reducing the need for repeated review meetings and allowing design iterations on the fly.

In conclusion, ROMs and DLS are making significant strides in engineering. Machine learning models, combined with surrogates such as the ROM, are well placed to change engineering practice, enabling more efficient, environmentally friendly, and innovative ship designs. This evolution represents a transformative shift in how ship designers approach complex challenges, promising a brighter future for the maritime industry.

Moreover, the use of machine learning in product development is gaining momentum, with leading companies like Audi, Airbus, Lamborghini, and Volkswagen incorporating AI into their engineering processes. This shift towards AI-driven design and optimisation tools heralds a new era in which products continually improve based on the performance of their predecessors, leading to more efficient and innovative solutions.

The Future Unfolds: Deep Learning in Engineering

As we look ahead, the application of deep learning in product development appears to be on the cusp of transformative change. What began as pioneering pilot projects has now evolved into a sweeping integration of Deep Learning Surrogates (DLS) within the standard engineering workflows of industry leaders across diverse product categories. This adoption trend signifies a shift towards more data-driven and efficient design processes.

Yet, the journey of DLS is far from over. Researchers and engineers are pushing the boundaries, seeking novel ways to leverage the potential of deep learning within the design process itself. One such frontier involves a bold departure from traditional simulation methods. Instead, a groundbreaking approach is being explored: training deep learning models using real-world data gleaned from the performance of existing products in the field.

This paradigm shift holds the promise of empowering companies to enhance their products. Imagine design and optimisation tools that not only expedite the development process but also possess the capacity to autonomously learn from the experiences and performance of previous product generations. It's akin to having a virtual mentor that continuously evolves and adapts, leading to ever-improving designs.

In this landscape of innovation and transformation, the future is undeniably promising. As deep learning continues to assert its presence and reshape the engineering design and optimisation landscape, we stand on the brink of a new era where the synergy of human creativity and artificial intelligence drives unprecedented advancements in product development.

The journey is just beginning, and the possibilities are limitless.

If you’d like to see more blogs about AI in engineering, consider subscribing to the blog - and leave a comment down below!

Keep engineering your mind! ❤️

Jousef